1 billion rows challenge in PostgreSQL and ClickHouse

- 6 minutes read - 1207 wordsLast week the good old Gunnar Morling launched an interesting challenge about ordering 1 billion rows in Java. Like my ex colleague and friend Robin Moffat, I’m not at all a Java expert, and while Robin used DuckDB to solve the challenge, I did the same with PostgreSQL and ClickHouse.

Alert: the following is NOT a benchmark! The test is done with default installations of both databases and NO optimization. The blog only shows the technical viability of a solution.

👉 Want a FREE PostgreSQL database?👈

🦀 Check Aiven’s FREE plans! 🦀

⚡️ Want to optimize your SQL query with AI? ⚡️

Check Aiven SQL Query Optimizer!

⚡️ Want a Fully PostgreSQL optimized Database? ⚡️

Check Aiven AI Database Optimizer!

Powered by EverSQL, it provides you index and SQL rewrite recommendations to take your database performance to the next level

Generate the data

I used pretty much the same steps as Robin to generate the data

- Forked the repository and cloned locally

- Installed Java21 to generate the data

sdk install java 21.0.1-zulu

sdk use java 21.0.1-zulu

- Built the data generator

./mvnw clean verify

- Generated some rows

./create_measurements.sh 1000000000

The above generates a file named measurements.txt with the 1 billion rows.

PostgreSQL

Setup local PostgreSQL

How do I load a local file into a PostgreSQL database? Well, it depends where the PostgreSQL database is. For my test I could have used Aiven but decided for a local installation on my Mac.

I installed PostgreSQL 16 with

brew install postgres@16

I can check how to start PostgreSQL locally with:

brew info postgresql@16

It tells me to execute:

LC_ALL="C" /usr/local/opt/postgresql@16/bin/postgres -D /usr/local/var/postgresql@16

Once run we should see the welcoming message LOG: database system is ready to accept connection

Load the data in PostgreSQL

With PostgreSQL running with all the default values (read more here) we are ready to upload the data.

We can connect to our PostgreSQL database with

psql postgres

Next step is to create a table containing our data with

CREATE UNLOGGED TABLE TEST(CITY TEXT, TEMPERATURE FLOAT);

We are using the UNLOGGED parameter to speed up the copy of the data, however we are assuming the risk of not writing any WAL log in the process… probably not the smartest idea if this is sensible data we’ll want to reuse later. We can load the data with the COPY command:

\copy TEST(CITY, TEMPERATURE) FROM 'measurements.txt' DELIMITER ';' CSV;

PostgreSQL Results

Following the same reasoning as Robin, I was able to get to a query like

WITH AGG AS(

SELECT city,

MIN(temperature) min_measure,

cast(AVG(temperature) AS DECIMAL(8,1)) mean_measure,

MAX(temperature) max_measure

FROM test

GROUP BY city

LIMIT 5)

SELECT STRING_AGG(CITY || '=' || CONCAT(min_measure,'/', mean_measure,'/', max_measure),', ' ORDER BY CITY)

FROM AGG

;

The main difference, compared to DuckDB is on the usage of the STRING_AGG function that allows me to directly create the string ordered by CITY with all the metrics.

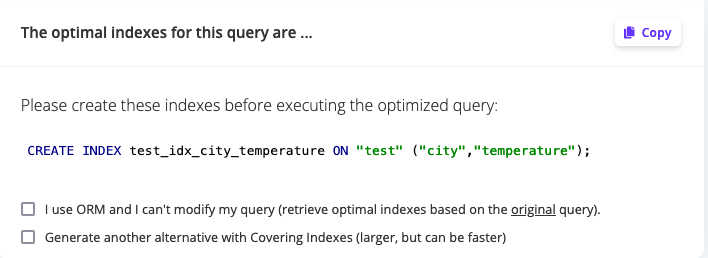

Note: If I use Aiven AI database optimizer to optimise the query, it’ll provide a suggestion to add an index on city and temperature:

CREATE INDEX test_idx_city_temperature ON "test" ("city","temperature");

PostgreSQL Timing

To get the timing I created a file called test.sql with the entire set of commands:

\timing

\o /tmp/output

-- Load the data

DROP TABLE TEST;

CREATE UNLOGGED TABLE TEST(CITY TEXT, TEMPERATURE FLOAT);

COPY TEST(CITY, TEMPERATURE) FROM '<PATH_TO_FILE>/measurements.txt' DELIMITER ';' CSV;

-- Run calculations

WITH AGG AS(

SELECT city,

MIN(temperature) min_measure,

cast(AVG(temperature) AS DECIMAL(8,1)) mean_measure,

MAX(temperature) max_measure

FROM test

GROUP BY city

)

SELECT STRING_AGG(CITY || '=' || CONCAT(min_measure,'/', mean_measure,'/', max_measure),', ' ORDER BY CITY)

FROM AGG

;

And then timed it with:

time psql postgres -f test.sql

The timing was a, not astonishing, 9m16.135s with the majority (6m:24.376s spent on copying the data) and 2m:51.443s on aggregating.

Edit Using PostgreSQL Foreign Data Wrapper (FDW)

As suggested on HN by using a File Foreign data wrapper we could eliminate the need of loading the data in the PostgreSQL table.

The test.sql has been changed to:

\timing

\o /tmp/output

-- Load the data

DROP TABLE TEST;

CREATE EXTENSION file_fdw;

CREATE SERVER stations FOREIGN DATA WRAPPER file_fdw;

CREATE FOREIGN TABLE TEST (

city text,

temperature float

) SERVER stations OPTIONS (filename '<PATH>/measurements.txt', format 'csv', delimiter ';');

-- Run calculations

WITH AGG AS(

SELECT city,

MIN(temperature) min_measure,

cast(AVG(temperature) AS DECIMAL(8,1)) mean_measure,

MAX(temperature) max_measure

FROM test

GROUP BY city

)

SELECT STRING_AGG(CITY || '=' || CONCAT(min_measure,'/', mean_measure,'/', max_measure),', ' ORDER BY CITY)

FROM AGG

;

Where the biggest change is that now the TEST table is defined as FOREIGN TABLE pointing directly to the measurements.txt file.

With The File FDW we removed the need of uploading the data to a table (that was costing us more than 6 minutes), but now the overall query takes 8m:24.572s. Overall, compared to the copy and query solution, we are 1 min faster.

ClickHouse

Setup local ClickHouse

How do I load a local file into a ClickHouse database? Well, it depends where the ClickHouse database is. For my test I could have used Aiven but decided for a local installation on my Mac.

I installed ClickHouse locally with (source)

curl https://clickhouse.com/ | sh

Query the data in ClickHouse

Compared to PostgreSQL, ClickHouse allows me to directly querying the CSV file without loading the data in a table.

I can query the measurement.txt file with:

./clickhouse local -q "SELECT c1 as city, c2 as measurement FROM file('measurements.txt', CSV) LIMIT 5" --format_csv_delimiter=";"

In the above I defined:

- a pointer to a file called

measurements.txtinCSVformat - a custom delimiter

; - the first column

c1ascity - the second column

c2as measurement

The input is correctly parsed.

Wellington 12

Riga 0.30000000000000004

Palermo 18.4

Sochi 10.8

Accra 11.7

ClickHouse Results

Following the same reasoning as Robin, I was able to get to a query like:

WITH AGG AS(

SELECT city,

cast(MIN(temperature) AS DECIMAL(8,1)) min_measure,

cast(AVG(temperature) AS DECIMAL(8,1)) mean_measure,

cast(MAX(temperature) AS DECIMAL(8,1)) max_measure

FROM (SELECT c1 as city, c2 as temperature FROM file('measurements.txt', CSV))

GROUP BY city

ORDER BY city

)

SELECT arrayStringConcat(groupArray(city || '=' || CONCAT(min_measure,'/', mean_measure,'/', max_measure)), ', ')

FROM AGG

The main difference, compared to the PostgreSQL solution is to use groupArray to create the list of cities (see the ORDER BY city in the first query to order them correctly), and the arrayStringConcat to concatenate the array elements in a string.

ClickHouse Timing

To get the timing I executed the following:

time ./clickhouse local -q """WITH AGG AS(

SELECT city,

cast(MIN(temperature) AS DECIMAL(8,1)) min_measure,

cast(AVG(temperature) AS DECIMAL(8,1)) mean_measure,

cast(MAX(temperature) AS DECIMAL(8,1)) max_measure

FROM (SELECT c1 as city, c2 as temperature FROM file('measurements.txt', CSV))

GROUP BY city

ORDER BY city

)

SELECT arrayStringConcat(groupArray(city || '=' || CONCAT(min_measure,'/', mean_measure,'/', max_measure)), ', ')

FROM AGG""" --format_csv_delimiter=";"

The result is 44.465s!

Conclusion

Both PostgreSQL and ClickHouse were able to complete the challenge. PostgreSQL initial solution had the limitiation of forcing the upload of data into a table that took most of the time, when using the file FDW the performances still weren’t great. ClickHouse, on the other side, was able to query directly the CSV and got much faster results, as expected from a database designed for analytics.

Alert: the above is NOT a benchmark! The test is done with default installations of both databases and NO optimization. The blog only shows the technical viability of the solution.